The AmpleAI plugin, developed by Amplenote staff, lets users tap into the rapidly growing potential of large language models (henceforth, LLMs) in the 2020s. The most up-to-date documentation lives on the plugin's marketplace page, but that documentation is brief. This page describes the plugin's functionality in more detail.

🪄 AmpleAI: Features

AmpleAI's functionality comes in many flavors. The sections below group features by how they are invoked.

🔍 Search Agent

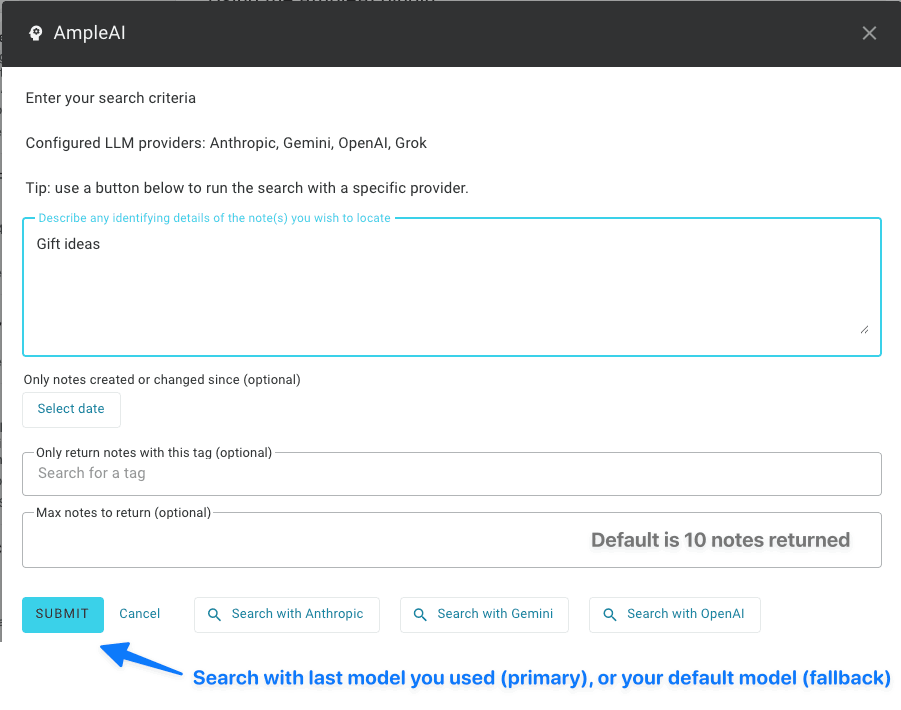

Looking to find all the historical notes you've taken on a specific topic? AmpleAI Search Agent helps you tap into the cumulative wisdom & experience of your past selves. Open it from the slash menu with /search, or open Quick Open (Cmd-O or Ctrl-O) and start typing "search" to find AmpleAI's AI Search Agent option. After opening Search Agent, you'll see a handful of fields. Only the first is required: a couple of words describing what you're looking for. The optional fields let you tailor your search results more precisely:

Options to control which notes are returned by search

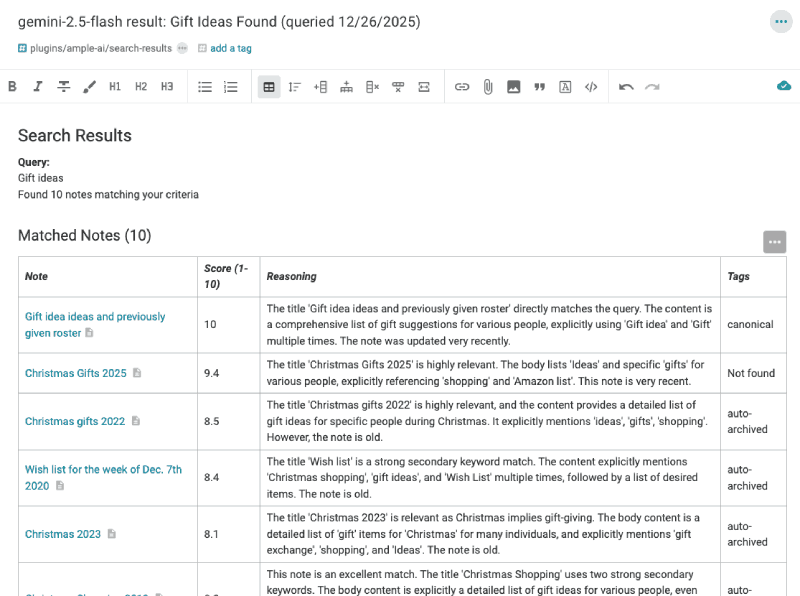

After you trigger a search, Amplenote's agent runs through a series of steps: it generates likely keywords for your input, then collects the notes that best align with what you requested. To check on progress, click the "AmpleAI" link under the "Plugins" heading. Most searches take around 30 seconds. When the search is complete, the "Plugin Activity" spinner disappears and AmpleAI generates a note summarizing the best matches it found.

Perusing notes most relevant to the query, though few include the original query in them

If you don't get the notes you hoped for, you can try again with a different LLM, provided you have used Add Provider API key (from the slash menu or Quick Open) to add API keys for more than one provider. Each LLM has its own ideas about how to decompose your input, so results can vary in interesting ways when you search a topic with many possible matches. Consider returning more than 10 notes if you're undertaking deep research on a topic you've written about extensively.
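To make the two steps above (generate keywords, then collect matching notes) more concrete, here is a minimal Python sketch. It is not AmpleAI's implementation: the keyword list is hard-coded where AmpleAI would ask an LLM to generate it, the notes live in an in-memory list, and `Note`, `rank_notes`, and its scoring rule (one point per keyword found in a note's title or body) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Note:
    title: str
    body: str


def rank_notes(notes: list[Note], keywords: list[str], max_results: int = 10) -> list[str]:
    """Return titles of the notes that mention the most distinct keywords.

    Hypothetical scoring: one point per keyword found (case-insensitively)
    in the note's title or body. Notes with no matches are dropped.
    """
    lowered = [k.lower() for k in keywords]
    scored = []
    for note in notes:
        text = f"{note.title} {note.body}".lower()
        score = sum(1 for k in lowered if k in text)
        if score > 0:
            scored.append((score, note.title))
    # Highest score first; ties broken alphabetically by title for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [title for _, title in scored[:max_results]]


notes = [
    Note("Christmas 2027", "Mom wants a new scarf; present for Sam: board game"),
    Note("Grocery run", "eggs, milk, bread"),
    Note("Birthday brainstorm", "gift card or concert tickets"),
]
# Stand-in for the keywords an LLM might generate from the query "gift ideas".
keywords = ["gift", "present", "christmas", "birthday"]
print(rank_notes(notes, keywords))
```

Neither matching note contains the literal phrase "gift ideas," which is why expanding a query into related keywords can surface notes that a plain text search would miss. The `max_results` parameter plays the role of choosing how many notes to return.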

Use it to:

Link together research/topics (no tags required). Search Agent's summary notes are an "AI auto-tagger" on steroids. As long as you keep the summary note, its table of links to the result notes will appear in the backlinks of every one of those notes. Besides making related notes easier to find, this keeps related content nestled together when you view any of those notes in the Graph Pane.

Discover poorly named/untagged notes. You're about to start writing a note when you suddenly get the feeling you've written about a similar topic before. The problem is, you have no idea what you named that note. Search Agent to the rescue: it looks for several permutations of whatever detail you provide.

Be lazier about naming your notes. Related to the previous point: since Search Agent can find related notes even when their titles are spartan, you don't need to spend time brainstorming every possible keyword for a note you want to find later. For example, if you title a note "Christmas 2027" and later search for "gift ideas," Search Agent can link to the "Christmas 2027" note as having content related to gift ideas. From then on, any time you look up "gift ideas" in Quick Open, you'll find a note (the search summary) that guides you to your "Christmas 2027" note.

App Option (Slash menu or "Quick Open") Features

Features accessed either by typing a slash (/) and then the command name shown in each item below, or by opening Quick Open and typing the command.

Add Provider API key. Add API keys for Google/Gemini, OpenAI/ChatGPT, Anthropic/Claude, Grok, or Deepseek. Links for retrieving your API key are available within the dialog. By default, AmpleAI uses the latest model from each provider you add, so we recommend leaving the "Preferred AI models (comma separated)" setting empty.
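The recommendation above amounts to a simple fallback rule: an empty setting means "use the provider's default." The Python sketch below illustrates that rule. The function name `pick_model`, the placeholder model identifiers, and the choice to use the first listed model are assumptions for illustration, not AmpleAI's actual parsing logic.

```python
def pick_model(preferred_setting: str, default_model: str) -> str:
    """Choose a model from a comma-separated preference string.

    Hypothetical rule: blank entries are ignored, the first remaining
    entry wins, and an empty setting falls back to the provider default.
    """
    preferred = [name.strip() for name in preferred_setting.split(",") if name.strip()]
    return preferred[0] if preferred else default_model


# Model identifiers below are illustrative placeholders, not real model IDs.
print(pick_model("", "default-model"))
print(pick_model(" model-a , model-b", "default-model"))
```

Leaving the field empty means you automatically pick up newer defaults without editing the setting, which is why it's the recommended choice.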

AI Search Agent. See usage notes in the 🔍 Search Agent section.

Question & answer aka Answer. Ask a question to your LLM of choice.

Converse/Chat aka Converse (chat) with AI. Have a back-and-forth conversation that remembers the previous responses.

Show AI Usage by Model. See how many times each LLM has been called since Amplenote was last restarted.
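One simple way to get "since Amplenote was last restarted" behavior is to keep the tally in memory only. The Python sketch below illustrates that idea; it assumes, rather than confirms, that AmpleAI's counter works this way, and `UsageTracker` and the model names are hypothetical.

```python
from collections import Counter


class UsageTracker:
    """Hypothetical in-memory tally of LLM calls per model.

    The counts live only in process memory, so they start from zero
    every time the application restarts.
    """

    def __init__(self) -> None:
        self.calls: Counter[str] = Counter()

    def record(self, model: str) -> None:
        self.calls[model] += 1

    def report(self) -> list[tuple[str, int]]:
        # Most-called models first.
        return self.calls.most_common()


tracker = UsageTracker()
for model in ["model-a", "model-b", "model-a"]:
    tracker.record(model)
print(tracker.report())
```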

Look up available Ollama models. Confirm that Ollama is installed and running in a way that lets AmpleAI query it.

Text Selection Features

Features invoked by selecting text and choosing an option from the rightmost icon in the toolbar that pops up.

Thesaurus. Get 10 suggested synonyms that make sense in the context around the word.

Answer question. If your highlighted text appears to be a question, you'll have the option to ask the AI for an answer to that question.

Complete a sentence. What text seems like it should come after the selected text?

Revise. How could the selected text be improved?

Rhymes with. What are 10 words that rhyme with the selected word?

Note option features

Features available by clicking the triple dot menu when a note is open.

Sort groceries. Given a task list or bulleted list of grocery items, arrange them by the store aisle where each item can be found.
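Conceptually, sorting groceries has two parts: deciding which aisle each item belongs in (the part an LLM is good at) and grouping the list by aisle. The Python sketch below shows only the grouping step, with a small hard-coded aisle table standing in for the LLM. `AISLE_OF`, its aisle names, and `group_by_aisle` are hypothetical; items the table doesn't know land in "Other".

```python
from collections import defaultdict

# Hypothetical aisle assignments; AmpleAI relies on an LLM rather than a fixed table.
AISLE_OF = {
    "milk": "Dairy",
    "cheddar": "Dairy",
    "apples": "Produce",
    "spinach": "Produce",
    "bread": "Bakery",
}


def group_by_aisle(items: list[str]) -> dict[str, list[str]]:
    """Group grocery items by aisle, keeping each aisle's items in list order."""
    aisles: dict[str, list[str]] = defaultdict(list)
    for item in items:
        aisles[AISLE_OF.get(item.lower(), "Other")].append(item)
    # Aisles appear in the order their first item was seen.
    return dict(aisles)


print(group_by_aisle(["milk", "apples", "bread", "cheddar", "spinach", "batteries"]))
```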

Revise. Suggest revisions to the entire note.

Summarize. Summarize the text of the open note.

Evaluation/Insert text features

Features invoked by typing an opening curly brace ({) and then one of the following.

Complete. Answer a question or finish a thought based on the text preceding the command.

Continue. Continue the text that precedes the command, in a similar style.

Image from preceding. Generate a DALL-E 2 or DALL-E 3 image from the preceding text.

Image from prompt. Generate a DALL-E 2 or DALL-E 3 image from a prompt that you enter after selecting this option.

Suggest tasks. Based on the note title and contents, suggest relevant tasks to undertake.

Check back regularly: we plan to add new AmpleAI features every month of 2026. ✨

🏦 Search models available

| Model | Provider | Input price ($ per 1M tokens) | Output price ($ per 1M tokens) | Release date | Notes |
| --- | --- | --- | --- | --- | --- |
| GPT-5.2 (Default) | OpenAI | $1.75 | $14.00 | Aug 2025 | Flagship agentic model. Cached input: $0.175. |
| GPT-5.2 Pro | OpenAI | $21.00 | $168.00 | Aug 2025 | High-precision/zero-failure tier. No cached-input discount listed. |
| GPT-5 Mini | OpenAI | $0.25 | $2.00 | Aug 2025 | High-throughput. Cached input: $0.025. Replaces GPT-4o mini. |
| GPT-4.1 | OpenAI | $2.00 | $8.00 | Apr 2025 | Mid-cycle update. Cached input: $0.50. Fine-tuning available. |
| GPT-4.1 Mini | OpenAI | $0.40 | $1.60 | Apr 2025 | Cached input: $0.10. |
| GPT-4.1 Nano | OpenAI | $0.10 | $0.40 | Apr 2025 | Edge/ultra-light tier. Cached input: $0.025. |
| o4-mini | OpenAI | $1.10 | $4.40 | Apr 2025 | Reasoning model. Cached input: $0.28–$1.00 depending on region. |
| o3 | OpenAI | $2.00 | $8.00 | ~Apr 2025 | Global pricing. Cached input: $0.50. |
| o3-pro | OpenAI | $20.00 | $80.00 | June 2025 | Specialized reasoning. Sources indicate variance ($20-$60). |
| o1 | OpenAI | $15.00 | $60.00 | Dec 2024 | Early reasoning flagship. Cached input: $7.50. |
| Gemini 3.0 Pro (Default) | Google | ~$1.25* | ~$10.00* | Nov 2025 | *Estimate based on Gemini 2.5 Pro "Paid Tier" pricing. |
| Gemini 2.5 Pro | Google | $1.25 | $10.00 | June 2025 | Standard pay-as-you-go. Grounding add-ons extra. |
| Gemini 2.5 Flash | Google | $0.10* | $0.40* | June 2025 | *Estimate based on low-tier pricing blocks. |
| Gemini 2.0 Flash | Google | ~$0.10* | ~$0.40* | Feb 2025 | High-speed multimodal. |
| Grok 4 (Default) | xAI | $3.00 | $15.00 | July 2025 | Frontier reasoning model. Tool calls: $5 per 1K. |
| Grok 4.1 Fast | xAI | $0.20 | $0.50 | Nov 2025 | Market floor. Price applies to reasoning and non-reasoning modes. |
| Grok 3 | xAI | $3.00 | $15.00 | Feb 2025 | Replaced by Grok 4. Legacy access keeps the same pricing. |
| Sonar Pro | Perplexity | $3.00 | $15.00 | Feb 2025 | Includes search, plus a request fee ($6-$14 per 1K requests). |
| Sonar | Perplexity | $1.00 | $1.00 | Feb 2025 | Based on Llama 3.3. Plus a request fee ($5-$12 per 1K requests). |
| Sonar Deep Research | Perplexity | $2.00 | $8.00 | 2025 | Specialized for long-form report generation. |
| Claude Opus 4.5 | Anthropic | $5.00 | $25.00 | Nov 2025 | For heavy reasoning tasks. |
| Claude Sonnet 4.5 (Default) | Anthropic | $3.00 | $15.00 | Sep 2025 | Anthropic's best model for multipurpose workloads. |
| Claude Opus 4 | Anthropic | $15.00 | $75.00 | May 2025 | Superseded by Claude Opus 4.5. |
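Prices in the table are quoted in dollars per million tokens, so the cost of a request is tokens / 1,000,000 × price, summed over input and output. The Python sketch below applies that to the GPT-5.2 row (input $1.75, cached input $0.175, output $14.00). The token counts are made-up examples, `estimate_cost_usd` is a hypothetical helper, and real bills can include extras the table mentions, such as Perplexity request fees or xAI tool-call charges.

```python
def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float,
    cached_input_tokens: int = 0,
    cached_input_price_per_m: float = 0.0,
) -> float:
    """Estimate one request's cost from per-1M-token prices.

    cached_input_tokens is the portion of input_tokens billed at the
    cached rate; the remaining input tokens are billed at the normal rate.
    """
    if cached_input_tokens > input_tokens:
        raise ValueError("cached_input_tokens cannot exceed input_tokens")
    uncached = input_tokens - cached_input_tokens
    total = (
        uncached * input_price_per_m
        + cached_input_tokens * cached_input_price_per_m
        + output_tokens * output_price_per_m
    ) / 1_000_000
    return round(total, 6)


# GPT-5.2 prices from the table: 10,000 input tokens (4,000 of them cached), 2,000 output.
cost = estimate_cost_usd(10_000, 2_000, 1.75, 14.00, 4_000, 0.175)
print(f"${cost:.4f}")
```

Output tokens dominate here: the 2,000 output tokens cost $0.028, more than twice the $0.0112 charged for the 10,000 input tokens. Without the cached discount, the same request would cost $0.0455. Prices marked with an asterisk in the table are estimates, so any cost computed from them is an estimate too.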

Choosing an AI backend

As of 2026, we recommend setting up AmpleAI with multiple AI providers, such as OpenAI (ChatGPT), Anthropic (Claude), and Google (Gemini). Run Add Provider API key once for each provider whose key you want to add. Since a new standard-setting model seems to be released every quarter, it's wise to keep your AI alliances loose.

If you are a technical user, it's also possible to connect Ollama and interface with local models you've installed. Find more details on this option in "Setting up Ollama," below. Since the frontier models are so powerful, and inexpensive relative to the value they create, we do not recommend that typical users endure the busywork of running a local LLM.

Note that, at the moment, OpenAI is the only supported provider for generating images. We plan to integrate with other providers' image & video generation services in Q1 2026.

Express setup

If you have an existing account with one of the AI providers listed, all you need to do is install the AmpleAI plugin, then run any of its features. You will be prompted for your LLM provider's API key. After you paste it in, AmpleAI will securely persist the key and enable its future use across any platform. 🚀

OpenAI setup

To get an OpenAI API key, sign up for OpenAI (https://platform.openai.com/signup), then visit OpenAI's API keys page (https://platform.openai.com/account/api-keys). If you find that all API calls fail, confirm that you have credits in your OpenAI account.
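When all API calls fail, the HTTP status and error body usually say why. The Python sketch below maps an OpenAI error response to a setup hint. It assumes OpenAI's documented error format at the time of writing: a JSON body with an `error` object whose `code` is `insufficient_quota` (sent with HTTP 429) when the account has no credits, or `invalid_api_key` (HTTP 401) when the key is wrong. `diagnose_openai_error` is hypothetical and not part of AmpleAI or OpenAI's SDK.

```python
import json


def diagnose_openai_error(status: int, body: str) -> str:
    """Turn an OpenAI API error response into a setup hint.

    Assumes a body shaped like {"error": {"code": ..., "message": ...}};
    anything else is treated as an error with no details.
    """
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        payload = {}
    error = payload.get("error") if isinstance(payload, dict) else None
    if not isinstance(error, dict):
        error = {}
    code = error.get("code")

    if status == 401 or code == "invalid_api_key":
        return "The API key was rejected; create a new one on OpenAI's API keys page."
    if status == 429 and code == "insufficient_quota":
        return "The key works, but the account is out of credits; add credits."
    if status == 429:
        return "Rate limited; wait a moment and try again."
    return f"Unexpected error (HTTP {status}): {error.get('message', 'no details')}"


body = '{"error": {"message": "You exceeded your current quota.", "code": "insufficient_quota"}}'
print(diagnose_openai_error(429, body))
```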

Gemini, Anthropic, Grok, Deepseek setup

When you run Add Provider API key after installing AmpleAI, we'll show you the link for retrieving your API key. It usually takes less than a minute if you already have an account with the provider (5-10 minutes to set one up, if not).


Setting up Ollama

To use the plugin with Ollama, start by installing Ollama from its download link. Then install an LLM using ollama run; for example, ollama run mistral (we have found that "mistral" seems to offer the best results as of early 2024).

After Ollama is installed, stop the resident Ollama server (click the Ollama icon in your toolbar and choose "Quit"), then open a console and run OLLAMA_ORIGINS=https://plugins.amplenote.com ollama serve. This restarts the server so that it accepts requests coming from the AmpleAI plugin.

You can test that your Ollama server started correctly by invoking Quick Open and running "Look up available Ollama models." If it doesn't work, run ps aux | grep ollama in a console to find existing Ollama servers, and kill those before re-running the command with OLLAMA_ORIGINS specified.

AmpleAI Rationale & Roadmap

AmpleAI offers a growing toolbox of functionality for users who want to benefit from the latest developments in AI. The plugin's source code is open source. Why did we choose to provide critical AI functionality through a plugin, instead of including it in the default Amplenote installation? For many reasons, which you are free to skip if you don't care:

To inspire users to recognize the potential afforded by plugins. We want to give our programmer (or aspiring programmer) users a living, breathing example of how powerful a plugin can be. In the long term, this should help make Amplenote easy to shape and mold, like a Shopify store. AmpleAI proves that plugins offer a powerful customization platform that can be tailored to even esoteric use cases.

Because AI is moving fast, we need the AI<->Amplenote connection to evolve equally fast. If we build & test an AI integration with our usual level of rigor, there is a good chance that the code will be deprecated within 6-12 months. It's generally harder to update & remove code within a large code base than a small one. By keeping our AI integration in a small git repo that anyone can visit, we can update it every month. We can also accept proposed code from anyone who wants to help improve the implementation.

There are no prompt engineering experts, so anyone might discover newer, more effective prompts to contribute back to the code. Since AI in 2026 continues to evolve with unprecedented speed, it would be foolish for the Amplenote dev team to presume we have a monopoly on crafting the best LLM prompts. Because our implementation is open source, any sufficiently motivated party can experiment with prompts and help us identify progressively more effective ones that evolve alongside state-of-the-art LLMs.

No cost markups. Because users choose which LLM to use on the backend and supply their own API keys, Amplenote users pay the lowest possible fees. Compared to platforms that gouge users with expensive markups over the OpenAI base costs, the Amplenote approach lets users tailor whether and how they are charged for their AI use.

Always looking for dogfood opportunities. The Alloy tagline is "Fine software products, dogfooded daily by people who love building software." We chose this tagline because we believe most bad software originates when a product's leaders aren't using the product. By making ourselves heavy users of our plugin API, we ensure its documentation and functionality evolve more reliably than if we weren't using it ourselves.

The bottom line: getting started with the AmpleAI plugin is as simple as 1) log in to Amplenote, 2) visit the AmpleAI plugin page, 3) click the "Install" button.

AmpleAI Source Repo

Want to fork the AmpleAI plugin, or navigate its full set of tests & functionality? Check out its open source GitHub repo.